Mirrors Of The Future - Some Thoughts On Long-Term Planning

Monday, 20 December 2021. By Michael Mainelli

[An edited version of this blog appeared as "Mirrors Of The Future", Archives of IT (20 December 2021).]

A general observation across several industries is that strategists look as far behind as they look ahead. Oil industry strategy departments look a century ahead, so their conversations about which books to read over the holidays are often peppered with references to inter-war histories. The City of London’s Remembrancer (effectively its strategist) looks centuries ahead to the future of the Square Mile and is familiar with, and uses, precedents spanning more than 14 centuries, right back to the Court of Hustings, which is still going today.

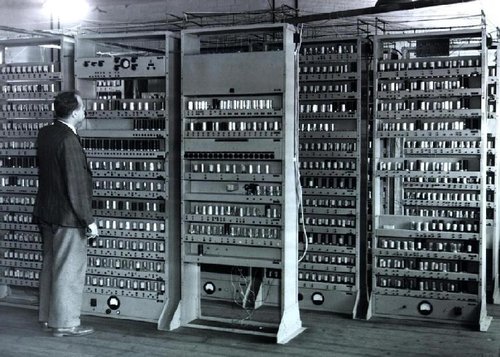

The information and communications technology (ICT) sector traditionally measures its strategy in shorter time-spans. The next big thing appears at next year’s Consumer Electronics Show in Las Vegas. Once the next big thing emerges, ICT strategists have often got away with just invoking ‘smaller, cheaper, faster’. Whatever is trending will follow some form of learning curve, Moore’s law if you will, along which the size and price of current kit drop exponentially while performance dramatically improves, until the next next big thing arrives.

However, as ICT matures, time-frames are lengthening. Major shifts to the cloud, to cellular networks, to the metaverse, or to quantum computing are bigger infrastructure decisions requiring more analysis and more thought over longer timescales. Longer time-frames increase the importance of history.

The adage “history does not repeat itself, but it often rhymes” implies that there is value in understanding history to help us deal with the future. However, ICT history is sparse, until recently seen as of little value. It’s hard to look far forward when it’s so tough to look just a little ways back. There are computing museums of course, but we are in the early days of systematic collections of organised materials for serious study and research.

The ‘information’ part of ICT means that concepts and ideas, mindsets if you will, are just as important as the technology. Further, as the technology is useless without software, understanding ICT history depends on being able to conceive the outputs and controls of hundreds of thousands of lines of decades-old code running on machines that no longer exist.

The Archives of IT works hard to preserve snapshots of those mindsets over the years. A good example is a treasure trove of a few hundred Butler Cox Foundation reports. The Butler Cox Foundation advised ICT directors on trends from 1977 to 1991. Online, the reports are well organised and easy to browse. A bit of hop-scotching includes Report 26: “Trends In Voice Communications Systems” (1981), which opens with “The user’s needs for telephone service can be described in terms of four factors: Cost, Availability, Reliability, Service facilities”. Plus ça change.

One of the earliest papers, “Trends In Office Automation Technologies” (1977), gives a good summary of the then-current views on text origination, i.e. entering text into computer systems. “The electronic tablet is unlikely to have any significant impact in this area for the simple reason that it is unlikely to prove cheaper than the alternatives, and its speed is limited to handwriting speed. Nor is voice input to a speech recognising machine likely to have any significant impact in the foreseeable future.” Ultimately, text entry remains a problem, but a diminishing one as the population has adopted ‘texting’ en masse, speech recognition has improved, and automated transcription of chat is becoming mainstream.

Some predictions were way off, for example on videotex. For those unfamiliar with that technology, videotex provided interactive content screen-by-screen, displayed on a video monitor such as a television, typically using modems to send data in both directions. “Videotex In Europe” (1985) was the eighteenth report in Butler Cox’s ongoing programme of videotex research since 1980. For those unfamiliar with technology hype, it was amazing at the time to see so many technology forecasters obsessed with the rise of videotex, a large, centralised systems approach suited to Post, Telegraph & Telephone (PTT) monopolies. This author was working at ISTEL, a subsidiary of British Leyland, which provided commercial videotex systems based on Aregon; for example, showrooms shared data about the availability of automobiles nationwide. This author also implemented videotex systems on automobile paintshop assembly lines using BBC micros. At the same time, this author ran a packet-switch-stream internet connection from his home. Which technology would succeed: centralised videotex systems, or decentralised internet systems you could install at home yourself?

Prestel was the UK Post Office’s videotex system, launched in 1979. It achieved a maximum of 90,000 subscribers in the UK and was eventually sold by BT in 1994. The French videotex system, Teletel, with its Minitel terminals for homes, had substantial government support. Millions of telephone subscribers were given free Minitel terminals and, heavily subsidised by the state, it was quite popular for a number of years. Nevertheless, Minitel succumbed to the Internet and shut down in 2012.

Butler Cox’s report contains no mention of the Internet, concluding, “Finally, videotex is also being taken increasingly seriously by users themselves. Especially as a result of the recent microcomputer revolution, users are becoming much more aware of how technology can benefit them. … Because of these and other related trends, we believe the outlook for videotex in Europe is extremely healthy for the next five years. (It may not, however, be as rosy as some European PTTs may believe or wish their potential customers to believe.) The spectacular success of the French Minitel distribution programme and the flourishing market for private videotex systems endorses our view that 1983/84 has marked the turning point for the videotex industry in Europe.”

Well, while you can’t get everything right, missing the Internet in its 16th year does seem a big miss. Yet, as a whole, the papers are strikingly well-written and clear-sighted. A particularly prescient paper in the collection might be “Escaping From Yesterday’s Systems” (1984) by George Cox, in which he concludes, “I believe the main features of the future environment will be: Continued choice of standards; Continued technical advance; Changing supplier alliances and positions, with a growing, and eventually major, Japanese input.”

Japanese hegemony and Japan’s now-derided Fifth Generation Plan for computing loomed large in many reports, but some advice stands the test of time despite these anachronisms about Japanese domination, like this from Report 39: “Trends In Information Technology” (1984):

“Six key policy areas to which management should pay close attention in an era of fast-changing technology.

- Watch for the key technology changes. Keep an informed eye, for instance, on the progress of the Japanese Fifth Generation Plan and on steps towards comprehensive standards for Open Systems Interconnection.

- Keep up-to-date with long-term work towards improved software tools. The key task is to understand the structure of users’ needs. This understanding will come only from patient thought and consideration.

- Monitor changes in the shape of the budget for information systems. If trends continue, how may the budget look five years from now?

- Extend the concept of the Information Centre to embrace more, and more senior, end users. Whether the support service to end users is called an Information Centre or not is not important. What matters is that it should seek out and stimulate users rather than simply respond to cries for help.

- Identify key suppliers whose demise would prove embarrassing. Try to monitor their survival prospects by tapping sources of information. Investment analysts reports are sometimes helpful.

- Reflect upon the emergence of two roles for the data processing manager; director of technical services, and director of information. Can these roles reasonably be combined in your own organisation?”

The Butler Cox publications are well worth trawling, whether to mine pertinent snippets of the past for future presentations, to see which predictions proved correct and which did not, or to conduct longitudinal research on which ICT management issues matter over the decades. Winston Churchill remarked, “The longer you can look back, the farther you can look forward.” The value of the Archives of IT will clearly continue to grow over time.

Professor Michael Mainelli is Executive Chairman of Z/Yen Group. His book, The Price Of Fish: A New Approach To Wicked Economics & Better Decisions, written with Ian Harris, won the Independent Publisher Book Awards Finance, Investment & Economics Gold Prize. Michael was interviewed for Archives of IT in 2021 – see full interview here.