Beyond LLMs - The Primordial Ooze Versus Intelligent Design Of AI

Thursday, 05 February 2026

By Professor Michael Mainelli

Introduction

Large Language Models are astonishing. They write essays, pass exams, and occasionally apologise with more sincerity than most institutions. We assume intelligence is just autocomplete with confidence. But LLMs are to artificial intelligence what the speaking clock was to timekeeping: impressive and useful but not remotely the whole story.

Beyond LLMs may lie systems that reason, remember, perceive, adapt, and occasionally don’t hallucinate. Such models may not need to ingest all of digitised human learning but may, like us, have to reason and act on a grotesquely limited set of information to set and achieve goals. Can we move to models that understand the world and not just remix it?

Where were we?

The artificial neural network was first proposed in 1943 by Warren McCulloch and Walter Pitts in “A Logical Calculus Of The Ideas Immanent In Nervous Activity”. In 1957, Frank Rosenblatt at the Cornell Aeronautical Laboratory simulated the perceptron on an IBM 704. Later, he obtained funding from the Information Systems Branch of the United States Office of Naval Research and the Rome Air Development Center to build a custom-made computer, the Mark I Perceptron. It was first publicly demonstrated on 23 June 1960. We’ve had several springs and winters for AI since.

[co-created with ChatGPT]

Long before trading, accountancy, and politics, I began playing with machine learning from a statistical perspective and neural nets from a computing perspective. In 1979 I built a then sizable image recognition neural net on a PDP-20 in Fortran, later playing with Lisp. However, I gave up on neural nets, telling my research colleagues at Harvard and the Aiken Computation Laboratory that “the next 30 years of research will be spent trying to figure out how they’re doing what they’re doing”.

My path was more down machine-learning techniques, flirting with expert systems in the early 1980s, then KNN approaches and a period building some of the earliest support vector machine systems outside of Russia.

Where are we now?

Since the release of ChatGPT on 30 November 2022, the world has been dominated by generative AI, and quite specifically by LLMs. Interestingly, some quarters seem to believe that people will retain economic value simply by following anthropologist Claude Lévi-Strauss’s dictum, “Le savant n’est pas l’homme qui fournit les vraies réponses, c’est celui qui pose les vraies questions.” (“The learned man is not the man who provides the correct responses, rather he is the man who poses the right questions.”) I believe that AI is not yet close to that stage given its known instabilities and limitations.

How did we get here?

It’s been a long, slow road, both before I left and since 1979. Wikipedia acknowledges two major ‘winters’ from approximately 1974–1980 and 1987–2000, and several smaller episodes, including:

- 1966: failure of machine translation

- 1969: criticism of perceptrons

- 1971–75: DARPA's frustration with the Speech Understanding Research program at Carnegie Mellon University

- 1973: large decrease in AI research in the United Kingdom in response to the Lighthill report

- 1973–74: DARPA's cutbacks to academic AI research in general

- 1987: collapse of the LISP machine market

- 1988: cancellation of new spending on AI by the Strategic Computing Initiative

- 1990s: many expert systems were abandoned

- 1990s: end of the Fifth Generation computer project's original goals

My observations since 1979 included:

- 1980: Neocognitron

- 1982: Japanese Fifth Generation project launched with a heavy emphasis on expert systems approaches

- 1982: Hopfield Networks

- 1986: Backpropagation Popularized

The Second "AI Winter" & Continued Research (1990s)

- 1991: Vanishing Gradient: Sepp Hochreiter identifies the "vanishing gradient problem," explaining why training very deep networks was difficult.

- 1997: Long Short-Term Memory (LSTM) networks address the vanishing gradient problem, allowing efficient training of Recurrent Neural Networks on long sequences.
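
For readers who want to see why depth was such a problem, here is a minimal sketch of the vanishing gradient effect. It assumes a toy chain of sigmoid units with a single shared weight, and the function names are purely illustrative rather than taken from any paper or library. Each layer multiplies the backpropagated signal by the weight times the sigmoid's slope, which is at most 0.25, so the product shrinks geometrically with depth.

```python
import math


def sigmoid(x: float) -> float:
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Slope of the logistic function; its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def backprop_factor(depth: int, pre_activation: float = 0.0, weight: float = 1.0) -> float:
    """Factor by which a gradient is scaled after passing back through
    `depth` identical sigmoid layers (a toy assumption: same weight and
    same pre-activation at every layer)."""
    return (weight * sigmoid_grad(pre_activation)) ** depth


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        print(f"depth {depth:2d}: gradient scaled by {backprop_factor(depth):.3e}")
```

Even in this best case for the sigmoid, ten layers leave less than a millionth of the original signal, which is roughly the difficulty that gated architectures such as the LSTM were designed to ease.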

The Deep Learning Revolution (2000s to 2010s)

- 2006: Deep Belief Networks

By 2009 I didn’t feel that my 1979 assessment was wrong.

- 2009 to 2012: GPU Acceleration

- 2012: AlexNet: a Convolutional Neural Network that destroys previous accuracy records and marks the start of the "deep learning" era

- 2014: GANs: Ian Goodfellow et al. propose Generative Adversarial Networks (GANs), allowing networks to generate new data

- 2015: Residual Neural Networks (ResNet), using "skip connections" to enable training of extremely deep (152+ layers) networks

The Modern Era: Transformers & LLMs (2017 to Present)

- 2017: Transformer Architecture: Ashish Vaswani et al. at Google publish "Attention Is All You Need," introducing the Transformer architecture, which replaces RNNs with self-attention mechanisms.

- 2018–2020: BERT & GPT: Large language models like BERT (Google) and GPT-3 (OpenAI) are released.

- 2022: Diffusion Models & ChatGPT: Text-to-image models (Stable Diffusion, DALL-E) and conversational AI (ChatGPT) go mainstream, powered by massive, scalable Transformer architectures.

- 2024–2025: Multimodal & Agentic AI: Models become multimodal (handling text, images, video simultaneously) and focus shifts towards "agentic" AI, which can reason and act autonomously.

In summary, AI evolution has relied on five factors: algorithms, chips, connectivity, energy, and data.

- Algorithms: fundamental approaches haven't progressed as much as people think. Innovation has been in application and, as the history above shows, incremental for the most part.

- Chips: an accidental gaming architecture that turned out to be appropriate and became standard.

- Connectivity: the most underappreciated enabler, transforming isolated experiments into global platforms.

- Energy: intense consumption has created business models where people sell paper in revenue-negative companies to subsidise grotesquely energy-expensive queries in hopes of building a sustainable, addictive, future market.

- Data: expect data, particularly in the non-static sense of flows of information, to become increasingly valuable, and increasingly ‘owned’ and protected. Data vaults, telemetry analysis, and provenance are increasingly important. By definition, LLMs are their data; brute-force data has, for the most part, driven them. But we’re close to reaching the limits of progress and creativity as we approach the limits of data.

Where do we want to go?

I’m not sure we want to go anywhere specific, rather to take a journey, but we are all tugging at the wheel to change direction. I would argue that we may well have disagreements of deep faiths and beliefs, almost religious wars ahead.

The essential ingredient for disagreeing agreeably is doubt. The Danish philosopher Søren Kierkegaard noted that to have faith, one must also have doubt. To have tolerance of other ideas and viewpoints, one must have doubt: to accept the possibility that you are wrong, and the other person is right. Scientific progress is grounded in doubt – to recognise that what we currently believe may be wrong.

Doubt opens the mind to new theories, new discoveries, and new solutions. And creativity comes from doubting this is the best we can do. Doubt unites faith and science. By embracing doubt, we make progress – tolerance, doubt, faith, science, creativity.

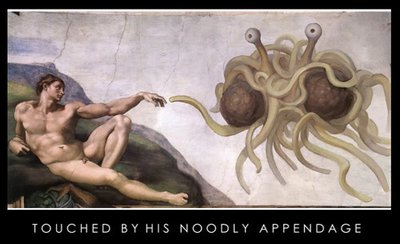

For example, I am a devout Pastafarian, adhering to the Church of the Flying Spaghetti Monster, having been ‘touched’ by His "Noodly Appendage". The Flying Spaghetti Monster was created in 2005 by Bobby Henderson in a satirical open letter to the Kansas State Board of Education. His Holiness was created to protest the Board of Education’s decision to teach ‘intelligent design’ as an alternative to evolution in public school science classes. Henderson argued that if intelligent design was taught, it was equally logical to teach that the universe was created by a "Flying Spaghetti Monster", an invisible, undetectable deity that created the universe while heavily intoxicated.

How are we going to get there?

I think there may be two schools of faith, quite similar to the sorts of troubles that inspired the creation of the Flying Spaghetti Monster. One group, and I would place much of the neural net community there, almost believe in the primordial ooze evolving, finally sparking something to life, but we don’t really have to think about it. It will evolve from where we are without us doing much but adding to the pile of ooze and sparking it from time to time.

The other school of faith resembles the Intelligent Design community. The Six Million Dollar Man (1970s TV series) opened with: "Gentlemen, we can rebuild him. We have the technology. We can make him better than he was. Better ... stronger ... faster". To do this we need an overarching theory of intelligence and more, let alone ways to build it.

What paths might we take?

Primordial Ooze community paths rely on increasing complexity leading to emergent properties:

1 – more data in bulk, though it is hard to see where that will come from, or adding data that may be lacking, such as experiencing the world as humans do: bouncing, jumping, falling, etc. Little robot dolls could feed data into systems so AIs can have similar experiences to us growing up, or the data could come via robots in the wild;

2 – more raw power in chips and substrates;

3 – using LLMs to design AI.

Intelligent Design community paths look to design new alien life forms:

1 – somehow intelligence is mathematically calculable, and symbolic logic approaches such as neuro-symbolic AI succeed;

2 – full-out emulation of artificial neural networks in hardware, or more. I’m aware of a project seeking, with great ethical concern, to launch human brain organoids into space to recreate neural networks at scale cheaply;

3 – quantum calculation approaches, and new substrates for calculating at levels undreamt of.

My view? A bit of both. I think we need to move up a level and consider LLMs and symbolic approaches both interacting and learning from each other, combined with more specialised hardware built for the task. To me, it’s a multi-agent future, rather similar to human society. Clawdbot, Moltbot, and OpenClaw perhaps show early promise. The Z/Yen community over the decades has explored genetic algorithms, GANs, agent-modelling, and even combative dialogue LLMs in 2023. Multi-agent approaches lend themselves to evolution, but the ecosystems in which they perform will be very complex.

If multi-layered, interlaced, complex models are the future, then we need to truly up our ability to analyse networks in rigorous ways: network theory on steroids. But then we come full circle: “the next 30 years of research will be spent trying to figure out how they’re doing what they’re doing”.

So beyond LLMs lies not salvation but curiosity: more doubt, better theories, fewer hallucinations—and eternal funding proposals explaining why, after thirty years, we’re still asking why the machine just did what it did.

![[co-created with ChatGPT]](https://www.longfinance.net/media/images/Primordial_Ooze_vs_Intelligent_Design.width-400.png)